How combining qualitative and quantitative research overcomes the limitations of each.

Quantitative experiments are a powerful tool for product development. However, relying solely on quantitative methods means missing valuable data, which wastes your product and development teams' time and costs your company money. Read on to learn why a mixed-methods approach is the best way to gather data that informs product decisions.

Some teams find it hard to justify qualitative research. Quantitative research methods are faster and can be cheaper, so it may not be immediately obvious why we should bother with slower, more expensive qualitative research.

To address this, I’ll explain some of the gaps left by relying solely on analytics, surveys and quantitative tests. As we’ll see, qualitative research complements those studies. Used together, the two approaches give our teams ‘complete’ conclusions that are safe to act on.

“Why did they do that?”

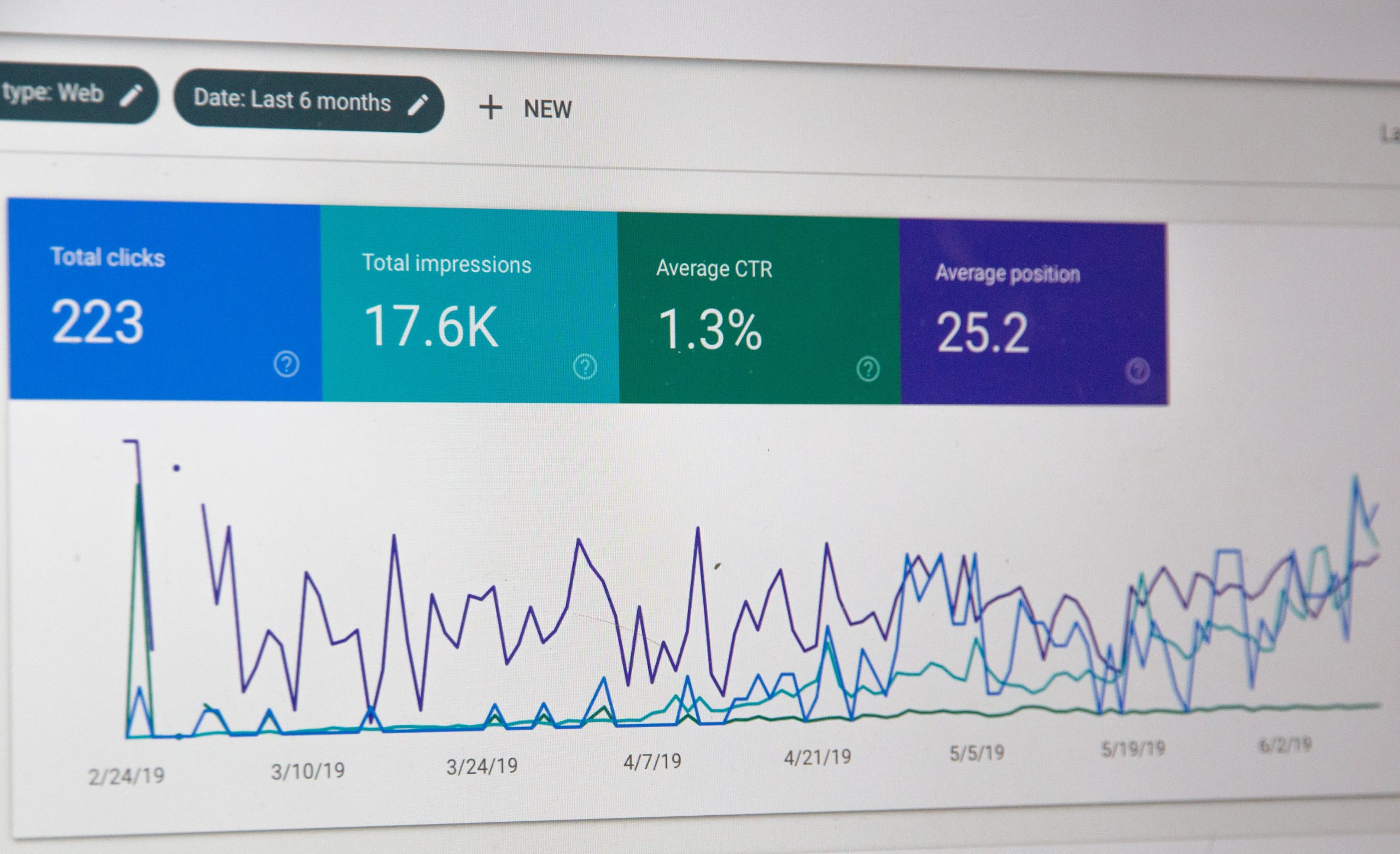

Analytics and A/B tests are great at revealing what users are currently doing on your site. This is extremely valuable when measuring the impact of changes you make. But they give no insight into why users are behaving that way. These methods also fail to show what users are doing immediately before and after they use your site.

Understanding how your product fits into the user’s wider journey is essential for making informed decisions about what to change. We want to change user behaviour to meet business goals, such as encouraging people to use our service or click our buttons. Understanding the reasons behind current behaviour helps us choose the right interventions to change it.

These gaps force teams to make assumptions about user behaviour. Unproven assumptions risk incorrect decisions about how to change that behaviour, which wastes time and costs money.

Although surveys can be used to ask “why did you do that?”, they are subject to biases, such as participants wanting to give ‘pleasing’ or socially desirable answers, which can make the answers unreliable. I also frequently see cases where the participant’s understanding of the subject differs from the researcher’s, and sometimes neither recognises that they are describing different things. This is very hard to notice in survey responses, and it undermines the validity of the results.

Quantitative research answers ‘what are users doing?’. Qualitative research, gathered through direct observation in moderated sessions, answers ‘why are they doing that?’. Together they are a very strong combination for informing product decisions.

Learning too late

Reaching the number of users needed to draw reliable quantitative conclusions requires a reasonably ‘complete’ experience, because it relies on a large number of participants completing unmoderated sessions. That scale is often only achievable late in development, whereas qualitative testing can begin on early prototypes.
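To give a rough sense of the scale involved, here is a minimal Python sketch of the standard normal-approximation formula for the sample size of an A/B test comparing two conversion rates. The 5% baseline and 6% target rates are hypothetical numbers chosen purely for illustration, and the 5% significance level and 80% power are common defaults rather than recommendations; a real experiment design may use different values or a different method.

```python
import math
from statistics import NormalDist


def users_per_variant(baseline: float, expected: float,
                      alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed in EACH arm of a two-sided A/B test
    comparing two conversion rates (normal approximation)."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for a two-sided test
    z_beta = z.inv_cdf(power)           # quantile giving the desired power
    # Sum of the Bernoulli variances of the two conversion rates
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    effect = expected - baseline        # the difference we want to detect
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical example: detect a lift from 5% to 6% conversion
n = users_per_variant(0.05, 0.06)
print(f"About {n} users needed in each variant")
```

Under these assumptions, detecting a one percentage point lift needs roughly 8,000 users in each variant, and smaller effects need more. That is why this kind of test usually has to wait until a product has real traffic.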

Waiting for that scale wastes development time. Learning about problems earlier, and being able to explore or dismiss ideas without creating expensive assets, leads to quicker decision-making and less need to throw away ‘completed’ work.

Early decisions are best informed by an understanding of current user behaviour and the issues users encounter, rather than by hypothetical “what would you do?” questions, which are unreliable predictors of behaviour. An experienced user researcher and designer working together can identify business opportunities and dead ends early in a project by running qualitative research. This can then be combined with quantitative research as the product scales up.

Optimising for the local maximum

A/B tests are extremely good at polishing experiences. They give designers and product managers the ability to measure the impact of changes, and make iteration easy.

A/B tests often focus on the impact of small changes, such as changing the button text or the colour of a webpage (Google famously tested 41 different shades of blue to measure the impact on their KPIs). However, over time this focus can discourage teams from making significant changes whose impact can’t be measured through small interventions. They end up with an extremely polished version of an imperfect solution. Moving beyond that imperfect solution requires a bigger change than the difference between two A/B versions, and such a change is perceived as risky, so teams become unable to escape their local maximum.

A/B tests will take you to the top of the smaller mountain, but can never get you across the valley to the larger mountain.

Qualitative research allows outside inspiration to disrupt the local maximum, and can give a steer on radically different changes that eventually lead to a better solution. However, it is less suitable for detecting the impact of small changes. Combining both methods overcomes that limitation.

The limitations of qualitative research

Relying solely on qualitative research is also misguided. Although observational studies are good at understanding user behaviour and identifying usability issues, they are less good at showing how representative that behaviour is. ‘How big is this problem?’ is important information for de-risking decisions.

To give ‘complete’ answers, researchers need to be aware of the limitations of each method and be able to draw safe conclusions, while being transparent about the gaps in those conclusions. Combining qualitative and quantitative methods helps overcome the limitations of using only one.

This doesn’t mean researchers have to be able to run A/B tests themselves, but they should be actively cultivating relationships with colleagues who can. By working in partnership they can provide complete answers to inform product decisions.

In my book Building User Research Teams, I describe how to build and empower a new user research team that can run insightful qualitative research studies. Find the book on Amazon to learn more about running great qualitative research.